This guideline describes a number of measures that can be used in, or can influence, the development tests. Most of these measures affect the way in which the unit test (UT) and/or the unit integration test (UIT) is carried out. The exception is the code review, which supplements the development tests and has no direct influence on them. Whether any of these measures is chosen, and if so which ones, depends on the situation. For that reason, they are not included in the development test activities, but are briefly described below. The fact that they are optional emphatically does not mean that their advantages are limited.

On the contrary, appropriate application of the measures in the right context can deliver huge advantages.

The following measures are discussed in succession:

- Test Driven Development (TDD) - TDD is a development method that strongly influences the UT, because it presupposes automated tests and ensures that test code is present for all the (source) code.
- Pair Programming - A development method in which two developers work on the same software and also specify and execute the unit test in mutual co-operation.
- Code review - This evaluation of the code supplements the development tests.
- Continuous Integration - An integrative approach that requires automated unit and unit integration tests, minimising the chance of regression faults.
- Selected quality of development tests - This approach is closely connected with, and forms part of, the test strategy. The choices made exert a strong influence on the method of specifying and executing the UT and UIT.
- Application integrator approach - An organisational solution for achieving a higher quality of the UT and UIT.

Test Driven Development (TDD)

Test Driven Development, or TDD, is one of the best practices of eXtreme Programming (XP). TDD strongly influences the way in which development testing is organised today, also outside of XP and particularly in iterative and agile development. It is an iterative and incremental method of software development in which no code is written before automated tests have been written for that code. The aim of TDD is to obtain fast feedback on the quality of the unit.

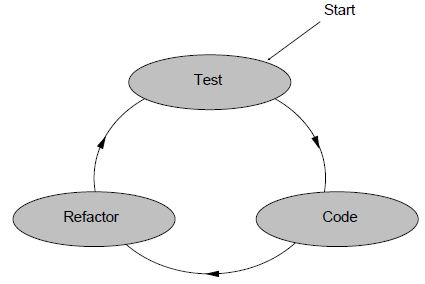

Figure 1: Test Driven Development

Development is carried out in short cycles, in accordance with the above scheme:

- Creating a test
  - Write a test - TDD always begins with the writing of test code that checks a particular property of the unit.
  - Run the test (with fault) - Execute the test and check that it fails, since no code has yet been written for that piece of functionality.
- Encode and test
  - Write the code - Write the minimum code required to ensure that the test succeeds. The new code written at this stage will not be perfect. This is acceptable, since the subsequent steps will improve on it. It is important that the code is written only to allow the test to succeed; no code should be added for which no test has been designed.
  - Execute the test and all the previously created tests - If all the test cases now succeed, the developer knows that the code meets the requirements included in the tests.
- Refactoring
  - Clean up and structure the code - The code is cleaned up and structured without changing the semantics. The developer regularly executes all the test cases. As long as these succeed, the developer knows that the amendments are not damaging any existing functionality. If a test case fails, a wrong change has been made.
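
The cycle above can be sketched in a few lines of Python using the standard `unittest` module. This is only an illustration under stated assumptions: the `shipping_cost` function, its threshold and its fee are hypothetical and do not come from the guideline. The tests are written first, run once while the code is still missing, and run again after each change.

```python
import io
import unittest


class ShippingCostTest(unittest.TestCase):
    """Write a test: the tests exist before the code they check."""

    def test_small_order_pays_flat_fee(self):
        self.assertEqual(shipping_cost(order_total=20), 5)

    def test_large_order_ships_free(self):
        self.assertEqual(shipping_cost(order_total=50), 0)


def run_tests(test_case):
    """Run one TestCase class quietly; return True only if every test passed."""
    suite = unittest.TestLoader().loadTestsFromTestCase(test_case)
    result = unittest.TextTestRunner(stream=io.StringIO()).run(suite)
    return result.wasSuccessful()


# Run the test (with fault): shipping_cost (hypothetical) does not exist yet,
# so both tests end in a NameError and the run is unsuccessful.
red = run_tests(ShippingCostTest)


# Write the code: the minimum needed to make the tests succeed.
def shipping_cost(order_total):
    if order_total >= 50:
        return 0
    return 5


# Execute the test and all the previously created tests.
green = run_tests(ShippingCostTest)


# Refactoring: name the magic numbers without changing the semantics,
# then rerun the tests to confirm that nothing has been damaged.
FREE_SHIPPING_FROM = 50
FLAT_FEE = 5


def shipping_cost(order_total):
    return 0 if order_total >= FREE_SHIPPING_FROM else FLAT_FEE


still_green = run_tests(ShippingCostTest)
```

In a real project the failing and passing runs would come from the test harness or build tooling rather than from variables in the same file; they are kept together here only to show the order of the steps.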

The application of TDD has a number of big advantages. It leads to:

- More testable software - The code can usually be directly related to a test. In addition, the automated tests can be repeated as often as necessary.
- A collection of tests that grows in step with the software - The testware is always up to date because it is linked one-to-one with the software.
- High level of test coverage - Each piece of code is covered by a test case.
- More adjustable software - Executing the tests frequently and automatically provides the developer with very fast feedback as to whether an adjustment has been implemented successfully or not.
- Higher quality of the code - The higher test coverage and frequent repeats of the test ensure that the software contains fewer defects on transfer.
- Up-to-date documentation in the form of tests - The tests make it clear what the result of the code should be, so that later maintenance is made considerably easier.

The theory of TDD sounds simple, but working in accordance with the TDD principles demands great discipline, as it is

easy to backslide: writing functional code without having written a new test in advance. One way of preventing this is

the combining of TDD and Pair Programming (discussed later); the developers then keep each other alert.

TDD has the following conditions:

- A different way of thinking: many developers assume that the extra effort in writing automated tests is disproportionate to the advantages it brings. It has been seen in practice that the extra time involved in test driven development is more than made up for by the gains in respect of debugging, changing the software and regression testing.
- A tool or test harness for creating automated tests. Test harnesses are available for most programming languages (such as JUnit for Java and NUnit for C#).
- A development environment that supports the short test-code-refactoring cycle.
- Management commitment to giving the developers enough time and opportunity to gain experience with TDD.

For more information on TDD, refer to [Beck, 2002].

TDD strategy for testing GUI: TDD also has its limitations. One area where automated unit tests are difficult to implement is the graphical user interface (GUI). However, in order to make use of the advantages of TDD, the following strategy may be adopted:

- Divide the code as far as possible into components that can be built, tested and implemented separately.
- Keep the major part of the functionality (business logic) out of the GUI context. Let the GUI code be a thin layer on top of the stringently tested TDD code.
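
A minimal sketch of this separation is shown below. All names (`parse_quantity`, `order_total`, `OrderForm`) are hypothetical, and no real GUI toolkit is used: the form receives two callables that stand in for reading a text field and writing to a label. The business rules can then be unit tested without any GUI, and the form itself contains almost nothing that needs testing.

```python
def parse_quantity(text):
    """Business rule, free of GUI code: a whole number from 1 to 99."""
    value = int(text.strip())  # raises ValueError for non-numeric input
    if not 1 <= value <= 99:
        raise ValueError("quantity must be between 1 and 99")
    return value


def order_total(unit_price_cents, quantity):
    """Business rule, free of GUI code: total price in cents."""
    return unit_price_cents * quantity


class OrderForm:
    """Thin GUI layer (hypothetical): only passes text to and from the logic."""

    def __init__(self, read_input, show_output, unit_price_cents=250):
        self.read_input = read_input      # stands in for reading a text field
        self.show_output = show_output    # stands in for updating a label
        self.unit_price_cents = unit_price_cents

    def on_submit(self):
        try:
            quantity = parse_quantity(self.read_input())
        except ValueError as error:
            self.show_output(f"Invalid quantity: {error}")
            return
        total = order_total(self.unit_price_cents, quantity)
        self.show_output(f"Total: {total / 100:.2f}")


# Usage without a real GUI: a lambda plays the text field, a list plays the label.
messages = []
OrderForm(lambda: " 3 ", messages.append).on_submit()
```

With a real toolkit, only the two callables would change; the tested functions stay as they are.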

Pair Programming

Pair Programming is another best practice of eXtreme Programming (XP) that is also popular outside of XP. With Pair Programming, two developers work together on the same algorithm, design or piece of code, side by side at the same workplace. There is a clear division of roles. The first developer operates the keyboard and actually writes the code. The second developer checks (evaluates!) the code as it is written and thinks ahead about subsequent steps. Defects are quickly observed and removed. The two developers regularly swap roles.

The significant advantages of Pair Programming are:

- Many faults are caught during the typing, rather than during the tests or in use.
- The number of defects in the end product is lower.
- The (technical) designs are better and the number of code lines is lower.
- The team solves problems faster.
- The team members learn considerably more about the system and about software development.
- The project ends with more team members understanding all parts of the system.
- The team members learn to collaborate and speak to each other more often, resulting in improved information flows and team dynamic.
- The team members enjoy their work more.

In recent decades, this method has been cited at various times as a better way of developing software. Research has

demonstrated that by deploying a second developer in Pair Programming, the costs do not rise by 100%, as may be

expected, but only by around 15% [Cockburn, 2000]. The investment is recouped in the later phases as a result of the

shorter and cheaper testing, QA and management.

Code review

A further measure for increasing the quality of the developed products is an evaluation activity: the code review.

The code review can be carried out as a static test activity within development testing. Its aim is to ensure that the quality of the code meets the set functional and non-functional requirements.

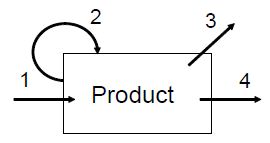

In the code review, the following points can be checked, independently of the set requirements; see also figure 2:

-

Has the product been realised in accordance with the assignment? For example, are the requirements laid down in the

technical design realised correctly, completely and demonstrably?

-

Does the product meet the following criteria: internally consistent, meeting standards and norms and representing

the best possible solution? ‘Best possible solution’ means the ‘best solution’ that could be found within the given

preconditions, such as time and finance.

-

Does the product contribute to the project and architecture aims? Is the product consistent with other, related

products (consistency across the board)?

-

Is the product suitable for use in the next phase of the development (integration)?

Figure 2: The 4 types of review goals and questions

For the review, various techniques can be employed; see also Evaluation Techniques.

The code review is also applicable to development environments and development tools that are mainly used for

configuration (rather than coding, e.g. with package implementations). In that case, the parameter settings are the

subject of the review.

When carrying out the code review, allowance should be made for any overlap with the use of code-analysis tools; see Test Tools For Development Test. These tools are increasingly being included in the

automated integration process, in which they perform all kinds of static analyses and checks automatically. The code

review therefore does not have to focus on these. It is advisable to include the output of the tools in the code review

report.

Continuous Integration

Continuous Integration has a particular influence on the organisation of the unit integration tests. It is a way of working in which the developers regularly integrate their work, at least on a daily basis and increasing to several integrations per day. The integration itself, consisting of combining the units and compiling and linking them into software, is automated. Each integration is verified by executing the automated tests in order to find integration defects as quickly as possible. The method minimises the chances of regression faults. Continuous Integration lends itself ideally to combination with Test Driven Development, and it requires a development environment that supports automated integration and testing.

Selected quality of development tests

An important reason for development testing is to meet the obvious expectation of the recipient party (client, project, system test, etc.), i.e. that the developed software ‘simply’ works. If many defects occur in the delivered software, solving them will cost (a lot of) time and money. The developers get the blame for this and are accused of operating unprofessionally.

But what is obvious quality to the client(s)? It is wise to make these seldom-expressed expectations explicit. They can be roughly divided into obvious expectations in respect of skill, and obvious expectations in respect of product quality.

In order to simplify the inventory, a summary of possible expectations is shown below.

- Obvious quality of the product:
  - Good is good enough - The delivered product is not required to be perfect, but should be good enough to be transferred to the next phase (of testing). What often happens is that developers test the first units (too) thoroughly. They then come under pressure of time with later units and do not test these thoroughly enough.
  - Once good, always good - Changes to the product should not lead to lower quality of the total product; regression faults are therefore not tolerated.
  - Processing of the most normal cases works flawlessly.
  - Basic user-friendliness (e.g. standard validations, technical consistency, uniformity).
- Obvious skill:
  - Basic knowledge of working in projects
  - Knowledge of the delivered quality
  - Obligation to obtain confirmation of the assumptions (interpretations of the specifications)
  - Obligation to signal “known errors” and/or “delay reports”
  - Awareness of own inexperience and/or incapacity
  - Optimum deployment of the available tools/facilities
  - Enlisting support when in doubt.

Obvious product quality is important, but particularly difficult to establish. The product quality to be delivered is

usually determined by the project, for which the developers work. This project, after all, has the purpose of

delivering a product that works satisfactorily within a certain time and budget.

However, there is a footnote to this. What does the developer do if a project sets no specific requirements on the quality of the delivered software, neither in the master test plan nor as suggested by the need for haste? Or if it even requests a “panic” delivery of barely tested software? Are no development tests then carried out? At first sight, it seems acceptable to leave out the development tests in that case: if the project issues the order, delivery may take place without testing. However, experience teaches that it is extremely unwise to carry out no, or inadequate, development tests. Although the project will make the milestone at that point, a time will come during the system test or acceptance test, or even worse, in production, when the defects will stream in nevertheless. In the end, this reflects badly on the developers.

For that reason, the development department, for which the developers work, also bears a responsibility here. It can

instruct that, irrespective of the project pressures, the developers should always deliver a consciously selected basic

quality at minimum.

If the basic quality is clearly established, the formulation of the development testing task within a project need only pay attention to those parts of the system in which a higher level of certainty is required.

To this end, the developer (development department) should have the depth, clarity, provision of proof and compliance monitoring of the development tests established. An important decision to be made is the required degree of proof of the testing. How much certainty is required that the tests have actually been executed entirely in accordance with the agreed strategy? And how much time and money can be spared for providing this proof? Increasingly, external partners (e.g. supervisory bodies) are also setting requirements on the proof to be supplied.

Example 1

Within an organisation, basic quality is defined as follows:

- Depth of test coverage - The basic depth of test coverage is when all the statements in the realised software have been touched on in a development test at least once (statement coverage).
- Clarity - Enumeration of the situations under test with reference to the development basis (requirements, specifications, technical design), with indication of whether the test of the situation takes place in the code review, unit test or unit integration test.
- Provision of proof - In the list of situations under test, initialling to indicate what has been tested and by whom, without explaining how the testing was done.
- Compliance monitoring - Code reviews (random checks).

In addition to the basic quality, the development department may also opt for a model with several quality levels. This

makes it easier for projects to make variations on the quality to be delivered.

Example 2

Over and above basic quality, an organisation defines three other quality levels. The basic quality is given the label

of bronze and the levels above it the labels silver, gold and platinum.

| | Bronze | Silver | Gold | Platinum |
|---|---|---|---|---|
| Depth | Statement coverage | Condition/decision coverage | Modified condition/decision coverage | Multiple condition coverage |
| Clarity | Enumeration of the situations to be tested with reference to the test/development basis (requirements, specifications, technical design), indicating whether the test of the situation takes place in the code review, unit test or unit integration test | Test cases (logical), indicating whether the test takes place in the code review, unit test or unit integration test | Test cases (logical and physical), with indication of whether the test takes place in the code review, unit test or unit integration test | Test cases (logical and physical), with indication of whether the test takes place in the code review, unit test or unit integration test |
| Proof | Initialled checklists | Test reports | Test reports + proof | Test reports + proof |
| Compliance monitoring | Code reviews and internal monitoring of test results (random checks) | Code reviews and internal monitoring of test results | Code reviews, internal monitoring of test results and random external audit checks | Code reviews, internal monitoring of test results and external audit |

With the creation of several quality levels, a situation arises in which the client chooses the required quality for the various parts of the system, and the development department puts a price tag on each quality level. In short, this is a first step towards negotiable selected quality. The selected quality is an agreement on how the development tests are set up in terms of the clarity, depth and proof of the executed tests.
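
The difference in depth between the four levels in Example 2 can be made concrete with a small sketch. It assumes a hypothetical decision `a and (b or c)` guarding a single statement with no else branch, and it checks which coverage type a given set of test inputs achieves. The checker functions are illustrative simplifications rather than a coverage tool, and the MC/DC check uses the strict form in which a pair of tests differs in exactly one condition.

```python
from itertools import product

N_CONDITIONS = 3


def decision(a, b, c):
    """Hypothetical decision under test, as in `if a and (b or c): act()`."""
    return a and (b or c)


def statement_coverage(tests):
    # With no else branch, one True outcome executes every statement.
    return any(decision(*t) for t in tests)


def condition_decision_coverage(tests):
    # Every condition and the decision itself must be both True and False once.
    outcomes = {decision(*t) for t in tests}
    conditions_ok = all(
        {t[i] for t in tests} == {True, False} for i in range(N_CONDITIONS)
    )
    return conditions_ok and outcomes == {True, False}


def modified_condition_decision_coverage(tests):
    # Every condition must be shown to change the outcome on its own.
    def shown_independent(i):
        for t in tests:
            for u in tests:
                only_i_differs = all(
                    (t[j] != u[j]) == (j == i) for j in range(N_CONDITIONS)
                )
                if only_i_differs and decision(*t) != decision(*u):
                    return True
        return False

    return all(shown_independent(i) for i in range(N_CONDITIONS))


def multiple_condition_coverage(tests):
    # Every combination of condition values must be tested.
    return set(tests) == set(product([True, False], repeat=N_CONDITIONS))


T, F = True, False
bronze = [(T, T, F)]                                   # 1 test
silver = [(T, T, T), (F, F, F)]                        # 2 tests
gold = [(T, T, F), (F, T, F), (T, F, F), (T, F, T)]    # 4 tests
platinum = list(product([T, F], repeat=N_CONDITIONS))  # 8 tests
```

For this decision the number of test inputs grows from one to two, four and eight as the level rises, which gives an impression of why a higher quality level carries a higher price.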

Proof

There are several possibilities for demonstrating that the required quality has actually been delivered (on time). Firstly, there is the provision of proof as established in the development testing strategy, together with the exit and entry criteria. These should be met before the next test level starts. The project manager may also require additional proof in order to monitor specific project risks. The decision regarding (additional) proof involves weighing up experience, risks and associated costs. Possible forms of proof, which can often be combined, are:

- Marked/initialled test basis - Initialling whatever has been tested in the development basis (requirements, functional design, use case descriptions and/or technical design), without indicating how the testing was done.
- Initialled checklist - Deriving a checklist from the test basis (e.g. requirements, use case descriptions and/or technical design) and initialling what has been tested, without indicating how the testing was done.
- Test cases - Test cases created using particular test design techniques with selected depth of coverage.
- Test cases + test reports - As above, plus a report of which test cases have been executed, with what result.
- Test cases + test reports + proof - As above, plus proof of the test execution in the form of screen and database dumps, overviews, etc.
- Test coverage tools (tools for measuring the degree of coverage obtained) - The output of such tools shows what has been tested, e.g. what percentage of the code or of the interfaces between modules has been touched on. This can be a part of the build report.
- Automated tests - The automated execution of tests in the new environment (e.g. system or acceptance test environment) very quickly demonstrates whether the delivered software is the same as the software that has been tested in the development tests and whether the installation has proceeded correctly.
- Demonstration - Demonstrating that the (sub)functionality and/or the chosen architecture works according to the set requirements.
- Test Driven Development compliance report - By means of review reports, it can be demonstrated that the guidelines concerning the application of TDD have been met.

It should also be agreed how the reporting should be done. Perhaps a separate test report is to be delivered, or it may

form a part of regular development reports.

Application integrator approach

If both the unit test and the unit integration test are employing a structured development test method, they

should obviously be aligned, so that there are neither unnecessary overlaps nor gaps in the overall coverage of the

development tests. A practical method is described below for simplifying this coordination, clearly stating the test

responsibilities and offering an easy aid to the structuring of the development testing.

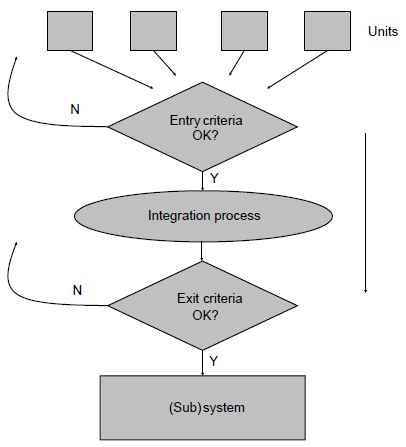

In this approach, an application integrator (AI) is given responsibility for the progress of the integration process

and for the quality of the outgoing product. The AI consults with his client (the project manager or development team

leader) concerning the quality to be delivered: under what circumstances may the system or subsystem be released to a

subsequent phase (exit criteria). The AI also requests insight into the quality of the incoming units (entry criteria),

in order to establish whether the quality of these products is sufficient to undergo his own integration process

efficiently. A unit is only taken into the integration process if it meets the entry criteria. A (sub)system is issued

if it (demonstrably) meets the exit criteria (see figure 3).

Figure 3: Entry and exit criteria

It should be clear that the proper maintenance of exit and entry criteria has a great impact on (obtaining insight

into) the quality of the individual units and the final system. Testing is very important for establishing these

criteria, since parts of a criterion consist of, for example, the quality characteristic under test, the required

degree of coverage, the use of particular test design techniques and the proof to be delivered. The entry and exit

criteria are therefore used in determining the strategy of the unit test and the unit integration test. This method of

operation also applies when the integration process consists of several steps, or in the case of maintenance.
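
How such criteria might be checked at the gate can be sketched as follows. The fields of `Criteria` and `Delivery` are hypothetical examples of what a criterion could contain (a required degree of coverage, the proof to be delivered, a limit on open serious defects); actual criteria are whatever the AI has agreed with the client and the developers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criteria:
    """Hypothetical entry or exit criteria agreed with the AI."""
    min_statement_coverage: float      # fraction of statements touched, 0.0 to 1.0
    required_proof: frozenset          # e.g. {"initialled checklist"}
    max_open_serious_defects: int = 0


@dataclass(frozen=True)
class Delivery:
    """What a unit or (sub)system brings to the gate (hypothetical fields)."""
    name: str
    statement_coverage: float
    proof: frozenset
    open_serious_defects: int


def violations(criteria, delivery):
    """Return the reasons why the delivery fails the criteria (empty if none)."""
    problems = []
    if delivery.statement_coverage < criteria.min_statement_coverage:
        problems.append("coverage below agreed depth")
    missing = criteria.required_proof - delivery.proof
    if missing:
        problems.append("missing proof: " + ", ".join(sorted(missing)))
    if delivery.open_serious_defects > criteria.max_open_serious_defects:
        problems.append("too many open serious defects")
    return problems


def admit(criteria, deliveries):
    """Split deliveries into those admitted and those sent back."""
    admitted = [d for d in deliveries if not violations(criteria, d)]
    rejected = [d for d in deliveries if violations(criteria, d)]
    return admitted, rejected


entry = Criteria(1.0, frozenset({"initialled checklist"}))
units = [
    Delivery("unit A", 1.0, frozenset({"initialled checklist"}), 0),
    Delivery("unit B", 0.8, frozenset(), 0),
]
admitted, rejected = admit(entry, units)
```

The same check can serve for the exit criteria of the (sub)system by passing a different `Criteria` object, which reflects that entry and exit criteria have the same kind of content.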

To avoid conflicts of interest, ideally the AI does not simultaneously fill the role of designer or development project

leader. This deliberately creates a tension between the AI, who is responsible for the quality, and the development

project leader, who is judged in particular on aspects such as the delivered functionality, lead-time and budget spend.

The role of the AI is described in Roles Not Described As A Position.

Salient measures in the approach are:

- A conscious selection is made of the quality to be delivered and the tests to be executed before delivery to the following phase (so that insight is also obtained into the quality).
- The tests carried out by the developers become more transparent.
- Besides final responsibility on the part of the project or development team leader, the responsibility for the testing also lies with an individual inside the development team.

Implementations of this approach have shown that the later tests will deliver a lower number of serious defects.

Another advantage of the approach is that earlier involvement of the system test and acceptance test is possible. Since

there is improved insight into the quality of the individual parts of the system, a more informed consideration of risk

can take place as regards earlier execution of certain tests. An example of this is that the acceptance test already

evaluates the screens for user-friendliness and usability, while the unit integration test is still underway. Such

tests are only useful when there is a reasonable degree of faith in the quality of these screens and the handling of

them.
